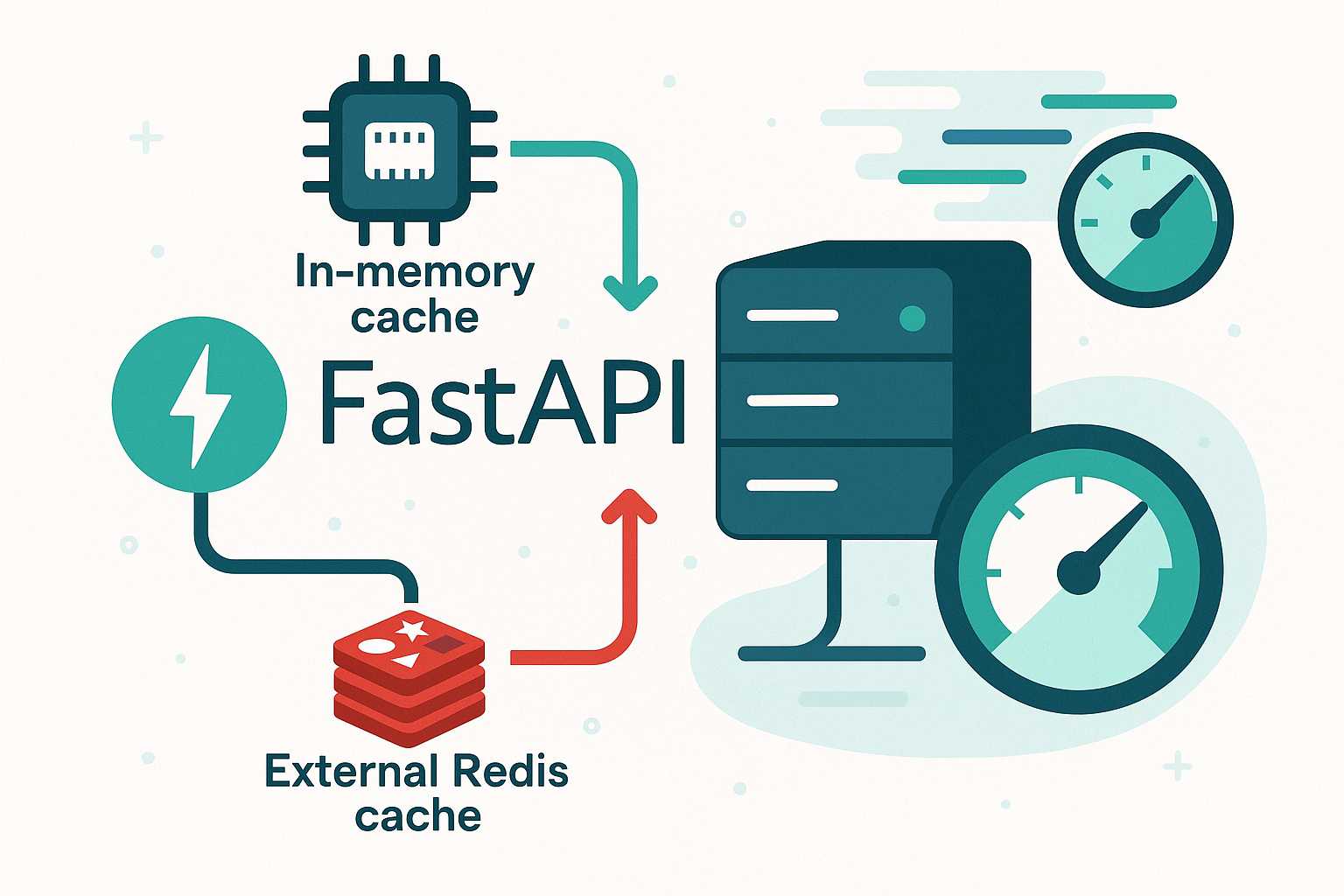

When building APIs with FastAPI, performance and responsiveness are paramount, especially as your application scales. One tried-and-tested way to boost speed and decrease database or computation load is by implementing caching. In this article, I’ll walk you through practical caching strategies for FastAPI applications, highlighting both in-memory and external solutions.

Why Cache?

Caching stores the results of expensive operations, such as database queries or computation-heavy tasks, so that identical subsequent requests are served without repeating the work. A cache hit costs roughly one lookup, which lowers latency for repeated requests and frees the database or CPU to handle more traffic.

In-Memory Caching with lru_cache

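For lightweight, single-process APIs, FastAPI works well with Python’s built-in functools.lru_cache decorator. It is particularly useful for pure functions that are called with a limited set of distinct argument values. The arguments must be hashable, because lru_cache uses them as the cache key.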

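    from functools import lru_cache

    from fastapi import FastAPI

    app = FastAPI()

    @lru_cache(maxsize=128)
    def get_expensive_data(param: str):
        # Imagine an expensive operation here.
        # external_service_query is a placeholder for your own function.
        return external_service_query(param)

    @app.get("/cached-data/{param}")
    def read_cached_data(param: str):
        # Repeated requests with the same param are served from the cache
        return {"result": get_expensive_data(param)}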

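Note: lru_cache is great for single-process servers, but it is a poor fit for applications running across multiple processes or machines (e.g., several Gunicorn or Uvicorn workers). Each worker process keeps its own separate cache, so entries are not shared and clearing the cache in one worker does not clear it in the others. lru_cache also has no expiration time: an entry stays until it is evicted or the cache is cleared.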
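To see what lru_cache is doing inside a single process, you can inspect it with cache_info() and reset it with cache_clear(), both of which are part of the standard library decorator. The sketch below is only an illustration: the function body and the call_count counter are stand-ins I made up, not part of the endpoint above.

```python
from functools import lru_cache

call_count = 0  # counts how often the "expensive" body actually runs


@lru_cache(maxsize=128)
def get_expensive_data(param: str) -> str:
    """Stand-in for a slow, deterministic lookup (hypothetical)."""
    global call_count
    call_count += 1
    return param.upper()


get_expensive_data("a")  # miss: the body runs
get_expensive_data("a")  # hit: served from the cache
get_expensive_data("b")  # miss: new argument

info = get_expensive_data.cache_info()
print(info.hits, info.misses, info.currsize)

# cache_clear() empties the cache, but only in the process that calls it.
get_expensive_data.cache_clear()
get_expensive_data("a")  # miss again after clearing
print(call_count)
```

With several workers, each process would report its own counters, which is a quick way to confirm that the cache is not shared.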

External Caching: Redis

For production deployments, using an external cache like Redis is the way to go. It allows all instances of your FastAPI app to share cached data, supporting more robust scaling.

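First, install a Redis client. The standalone aioredis package is no longer maintained; its asyncio client now ships with redis-py (version 4.2 and later) as redis.asyncio:

    pip install redis

Then, set up your cache logic:

    import redis.asyncio as aioredis
    from fastapi import FastAPI, Depends

    app = FastAPI()

    async def get_redis():
        # from_url builds the client; connections are opened lazily on first use
        redis = aioredis.from_url("redis://localhost", encoding="utf-8", decode_responses=True)
        try:
            yield redis
        finally:
            await redis.aclose()  # redis-py 5.0.1+; use close() on older versions

    @app.get("/cached/{item_id}")
    async def read_item(item_id: str, redis = Depends(get_redis)):
        cache_key = f"item:{item_id}"
        cached = await redis.get(cache_key)
        if cached is not None:  # an empty string is still a valid cache hit
            return {"item": cached, "cached": True}
        # Simulate an expensive operation (get_expensive_item is a placeholder
        # and must return a str, bytes, or number for redis.set to accept it)
        item = get_expensive_item(item_id)
        await redis.set(cache_key, item, ex=60)  # cache for 60s
        return {"item": item, "cached": False}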
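The endpoint above caches a plain string. Real items are often dicts or lists, which Redis cannot store directly, so one common approach is to serialize them to JSON and wrap the check-then-compute-then-store pattern (often called cache-aside) in a helper. Here is a minimal sketch under some assumptions: get_or_compute, load_item, and InMemoryCache are hypothetical names of mine, and InMemoryCache is only a stand-in with the same async get/set shape so the example runs without a Redis server.

```python
import asyncio
import json
from typing import Any, Awaitable, Callable, Optional


class InMemoryCache:
    """Stand-in with async get/set, so the sketch runs without Redis."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    async def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    async def set(self, key: str, value: str, ex: Optional[int] = None) -> None:
        # The TTL is accepted but ignored by this stand-in.
        self._data[key] = value


async def get_or_compute(
    cache: Any,
    key: str,
    compute: Callable[[], Awaitable[Any]],
    ttl: int = 60,
) -> tuple[Any, bool]:
    """Cache-aside helper: returns (value, was_cached)."""
    cached = await cache.get(key)
    if cached is not None:
        return json.loads(cached), True
    value = await compute()
    # Store the JSON text so structured values survive the round trip.
    await cache.set(key, json.dumps(value), ex=ttl)
    return value, False


async def load_item() -> dict:
    # Hypothetical expensive lookup.
    return {"id": "42", "name": "widget"}


async def main() -> tuple[tuple[Any, bool], tuple[Any, bool]]:
    cache = InMemoryCache()
    first = await get_or_compute(cache, "item:42", load_item)
    second = await get_or_compute(cache, "item:42", load_item)
    return first, second


first, second = asyncio.run(main())
print(first, second)
```

In a FastAPI route you would pass the Redis client from the dependency in place of InMemoryCache, since it offers get and set with an ex argument in the same shape.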

Cache Invalidation

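Invalidation limits how long your cache can serve stale data. Common strategies include setting expiration times (the ex parameter in Redis), which bounds staleness to the TTL, and explicitly deleting keys when the underlying data changes, which removes the stale entry right away. The two are often combined, with the TTL acting as a safety net for writes that bypass your invalidation code.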
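To make the two strategies concrete, here is a small in-process sketch that combines a TTL with explicit deletion on write. Everything in it is an assumption for illustration: TTLCache, read_item, update_item, and the database dict are hypothetical, and the clock is injected so expiry can be shown without sleeping. With Redis, the equivalent calls would be set with ex and delete on the same key.

```python
import time
from typing import Any, Callable, Optional


class TTLCache:
    """Minimal in-process cache with per-key expiry (illustration only)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._store: dict[str, tuple[float, Any]] = {}

    def set(self, key: str, value: Any, ttl: float) -> None:
        self._store[key] = (self._clock() + ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._store[key]  # expired: drop it and report a miss
            return None
        return value

    def delete(self, key: str) -> None:
        self._store.pop(key, None)


database = {"42": "old name"}  # hypothetical source of truth
now = [0.0]                    # fake clock, advanced by hand below
cache = TTLCache(clock=lambda: now[0])


def read_item(item_id: str) -> str:
    key = f"item:{item_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = database[item_id]
    cache.set(key, value, ttl=60)
    return value


def update_item(item_id: str, value: str) -> None:
    database[item_id] = value
    cache.delete(f"item:{item_id}")  # explicit invalidation on write


before = read_item("42")
update_item("42", "new name")
after = read_item("42")          # sees the update immediately

database["42"] = "changed elsewhere"  # a write that skips update_item
stale = read_item("42")          # still the cached value within the TTL
now[0] += 61
fresh = read_item("42")          # TTL elapsed, value is reloaded
print(before, after, stale, fresh)
```

The stale read in the middle is the case the TTL exists for: a change made outside your invalidation path is picked up once the entry expires.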

Conclusion

Adding caching to your FastAPI app, whether in-memory or external, can deliver large performance gains for endpoints that repeat expensive work. Start with lru_cache for simple, single-process cases and transition to Redis (or similar) as you scale. Happy coding!

—Fast Eddy
