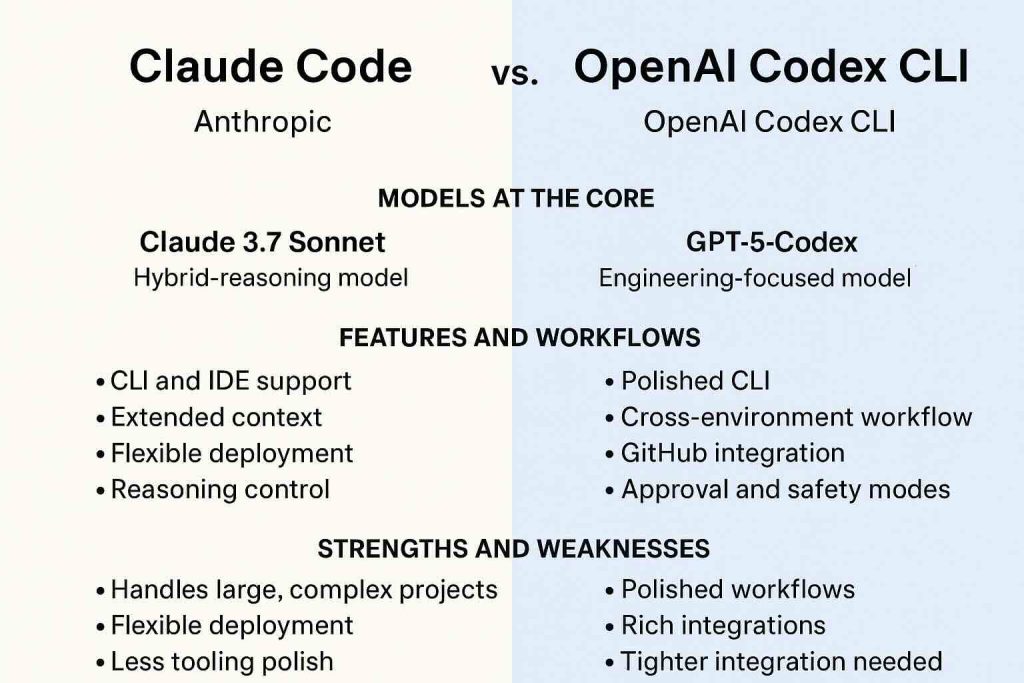

The rise of AI developer agents has shifted from novelty to necessity. What started as autocomplete tools has evolved into full-fledged teammates that can refactor codebases, review pull requests, run tests, and even manage development workflows end-to-end. Two of the leading contenders in this space are Claude Code (powered by Claude’s newer models) and OpenAI Codex CLI (now using GPT-5-Codex).

Below is a comparison of their architectures, workflows, strengths and weaknesses, and when each is likely the better fit. It has been updated to include Claude’s Opus line and precise release dates.

Release Timeline: Key Models

| Model | Release / Announcement Date | Notes |
|---|---|---|
| Claude 3.7 Sonnet | February 24, 2025 (Reuters) | Introduced hybrid reasoning (“extended thinking mode”) able to toggle between quick responses and more careful reasoning. Claude Code (agentic CLI) previewed around the same time. (Reuters) |
| Claude 4 family (Sonnet 4 & Opus 4) | May 22-23, 2025 (PromptLayer) | The Claude 4 models bring further improvements in reasoning, context, tool/image support, and agentic capabilities for general tasks. Opus 4 is the “flagship / more capable” variant in the Claude 4 line. (Anthropic) |
| Claude Opus 4.1 | August 5, 2025 (Anthropic) | An incremental but significant update to Opus 4: better performance on coding (especially large, multi-file refactors), reasoning, etc. Available via paid Claude, Claude Code, and APIs. (Anthropic) |
| GPT-5-Codex (OpenAI Codex CLI / Codex extension) | September 15, 2025 (announcement in model release notes) (OpenAI Help Center) | A variant of GPT-5 optimized for agentic coding in Codex workflows: CLI, IDE extension, cloud tasks, code reviews, etc. (OpenAI) |

What Is Claude Opus, and How Does It Fit In?

Before diving into the detailed feature comparison, it’s helpful to clarify where Claude Opus fits in with respect to Claude Sonnet and other Claude models.

- Claude Opus is Anthropic’s top-tier (“flagship”) general-purpose model in the Claude family. It is intended to deliver the highest capability in reasoning, coding, agentic workflows, and broader general tasks.

- Claude Sonnet variants (e.g. 3.7 Sonnet, Sonnet 4) aim for balance: strong reasoning and coding support, but somewhat less raw capability (and a correspondingly lower risk classification) than Opus.

- With Claude 4, both Sonnet 4 and Opus 4 were released simultaneously as part of a model-family update, with Opus 4 being more capable on demanding tasks. Similarly, Opus 4.1 is an upgrade over Opus 4.

- Claude Code uses these models: when you use Claude Code in its agentic coding workflows, you can access Opus (for higher capability) or Sonnet (for balanced performance) depending on plan / permissions.

So Opus is not “less advanced”; in many respects it is more advanced, especially for demanding reasoning and coding tasks. The trade-offs are usually cost, latency, resource usage, and risk/security posture.

Here’s a side-by-side comparison in article format, updated with Opus in the picture.

Models at the Core

Claude Code is powered by models in the Claude line: notably Claude 3.7 Sonnet (released February 24, 2025), followed by the Claude 4 family (Opus 4 & Sonnet 4) in May 2025, and then Opus 4.1 in August 2025, which is its current top-tier model for high performance. (Google Cloud)

OpenAI’s toolset for its Codex CLI and extensions is now built around GPT-5-Codex, officially introduced September 15, 2025. This model is fine-tuned for agentic engineering workflows: code reviews, large-scale refactors, running tests, working across environments. (OpenAI Help Center)

What They Bring: Features & Workflows

| Feature Area | Claude Code (latest, with Opus / Sonnet) | OpenAI Codex CLI (with GPT-5-Codex) |
|---|---|---|
| Coding & Agentic Tasks | Opus 4.1 and Sonnet 4 support large refactors and multi-file coding workflows. Claude Code lets developers delegate substantial coding tasks (editing, testing, etc.) directly via the CLI. Using Opus gives stronger capability on complex reasoning and bug-finding. (Anthropic) | GPT-5-Codex emphasizes real-world software engineering tasks: building full projects, code review, debugging, long tasks, etc. The CLI has been rebuilt to support images/screenshots, cross-environment workflows, and better efficiency on both “light” and “heavy” tasks. (OpenAI) |
| Context / Reasoning Depth | With models like Claude 3.7 Sonnet, Sonnet 4, and Opus 4 / 4.1, Anthropic supports “extended thinking” / hybrid reasoning (a step-by-step option). Large token windows and memory / state persistence help with big codebases. (Google Cloud) | GPT-5-Codex similarly supports varying reasoning depth: quick responses for simple tasks, more thoughtful ones for complex tasks. It also supports working with images/screenshots to supplement code or UI context. (OpenAI) |
| Permissions, Safety, Deployment Flexibility | Anthropic provides access via API, Claude Code, and paid plans, across clouds (Bedrock, Vertex AI, etc.), and lets users choose a model (Sonnet vs Opus) with the associated cost/safety trade-offs. Opus models are often deployed under stricter safety measures, reflecting their higher capability and the higher risk that comes with it. (Anthropic) | OpenAI’s GPT-5-Codex in Codex CLI/IDE comes with approval modes (read-only, auto, full access), permission settings, and sandboxing. It is designed to work across local and cloud environments, with safety / oversight built into workflows. (OpenAI) |

Strengths & Weaknesses (Updated with Opus)

Claude Code Strengths:

- Top-tier reasoning and deep capability with Opus 4.1, which shines on very complex, multi-file or multi-module problems.

- Model choice: you can pick between Sonnet (a bit more lightweight, cost-efficient) and Opus (for when capability matters most).

- Deployment flexibility: APIs, cloud platforms, etc., often with choice of cloud, region, plan.

- Strong support for large context windows, memory / persistence, and “extended thinking” modes.

Weaknesses or Trade-Offs:

- Using Opus models typically means more computational cost, possibly higher latency.

- Complex tasks may require more careful prompt engineering, and safety oversight and usage policies may be stricter.

- For users with limited resources / budgets, the overhead of using high-capability models may not justify the gains for simpler tasks.

On the other side, Codex CLI with GPT-5-Codex remains strong on workflow polish: tight integration with GitHub and IDEs, standardized code review, and good cross-environment support. Its reliability and developer tooling are also strong. But OpenAI’s models have their own costs and infrastructure dependencies, and they may be limiting for users who need maximum control over cloud deployment or over model safety / compliance in high-risk environments.

When to Use Which, Now

| Scenario | Best Fit |
|---|---|
| You have large, complex codebases, need high-capacity reasoning, or want the very top model performance (at cost) | Claude Opus 4.1 (via Claude Code) is likely your best bet. |
| You want balanced performance, lower latency, cost efficiency, still good reasoning and tooling | Claude Sonnet 4 or even Claude 3.7 Sonnet might suffice. |
| You prioritize tooling, smooth developer experience, integrated workflows (IDE, GitHub), polished agentic features with good safety / permissions | OpenAI Codex CLI with GPT-5-Codex is very strong in these areas. |
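
For readers who like to see a rule of thumb as code, the scenarios in the table can be sketched as a small Python helper. This is only an illustration: the `Needs` fields, the `recommend` function, and the order in which the rules are checked are all assumptions made for this sketch, not part of either vendor's tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Needs:
    """Hypothetical summary of a team's priorities (illustrative only)."""
    large_complex_codebase: bool = False
    cost_or_latency_sensitive: bool = False
    wants_integrated_ide_github_workflow: bool = False


def recommend(needs: Needs) -> str:
    """Map priorities to the 'Best Fit' column of the table.

    The precedence below (workflow integration first, then raw capability,
    then the balanced default) is an assumption of this sketch.
    """
    if needs.wants_integrated_ide_github_workflow:
        return "OpenAI Codex CLI with GPT-5-Codex"
    if needs.large_complex_codebase and not needs.cost_or_latency_sensitive:
        return "Claude Opus 4.1 (via Claude Code)"
    # Balanced performance, lower latency, and cost efficiency.
    return "Claude Sonnet 4 (via Claude Code)"


if __name__ == "__main__":
    print(recommend(Needs(large_complex_codebase=True)))
    print(recommend(Needs(cost_or_latency_sensitive=True)))
    print(recommend(Needs(wants_integrated_ide_github_workflow=True)))
```

In practice the scenarios overlap, so treat the output as a starting point for evaluation rather than a verdict.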

Summary

- Claude Opus is not less advanced; it is among the most advanced of Anthropic’s offerings, especially for demanding engineering tasks. The Opus line is meant to push the envelope, whereas Sonnet balances performance, cost, and speed.

- The latest models (Claude 3.7 Sonnet in February 2025; Claude Opus 4 / Sonnet 4 in May 2025; Opus 4.1 in August 2025; GPT-5-Codex in September 2025) show rapid progress from both Anthropic and OpenAI.
